Published 06/2022

Genre: eLearning | MP4 | Video: h264, 1280×720 | Audio: AAC, 44.1 kHz

Language: English | Size: 1.96 GB | Duration: 79 lectures • 6h 30m

Create ETL pipelines and extract data from the web.

What you’ll learn

Create ETL pipelines

Extract data from the web with Python

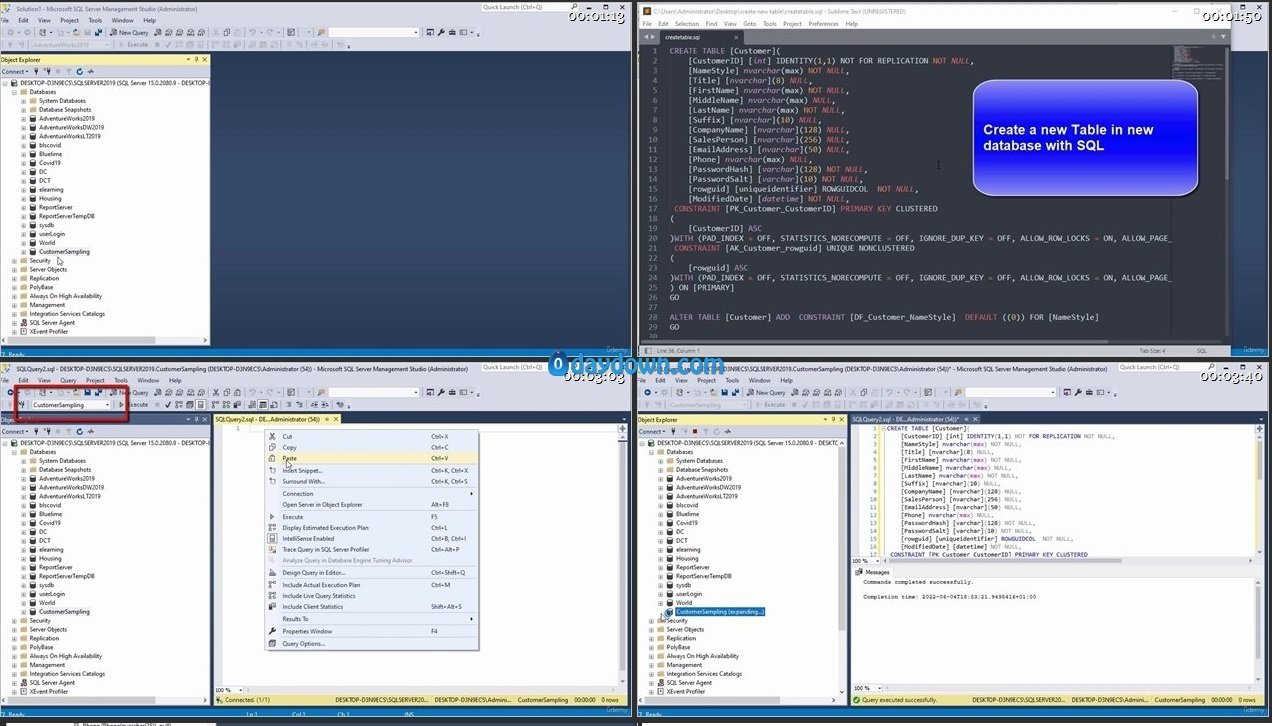

Create SSIS Package

Execute SSIS Package

Build Web Scraping Script

Prototype web scraping script

Configure data source and data destination

Clean and Transform Data

Perform data migration from SQL Server to Oracle

Requirements

Basic knowledge of Python advised

Basic knowledge of database concepts advised

Description

A data engineer builds big data ETL pipelines that make it possible to take huge amounts of data and turn them into insights. Data engineers focus on the production readiness of data: formats, resilience, scaling, and security.

SQL Server Integration Services (SSIS) is a component of the Microsoft SQL Server database software that can be used to perform a broad range of data migration tasks. SSIS is a platform for data integration and workflow applications. It features a data warehousing tool used for data extraction, transformation, and loading.

ETL, which stands for extract, transform and load, is a data integration process that combines data from multiple data sources into a single, consistent data store that is loaded into a data warehouse or other target system.
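To make the three stages concrete, here is a minimal Python sketch, not SSIS and not the course's own code. It assumes two hypothetical in-memory sources (a CSV string and a JSON string standing in for, say, a file export and an API response) whose field names and casing disagree, and it uses the standard library's SQLite as a stand-in target store. The names `extract`, `transform`, `load`, and `run_pipeline` are illustrative.

```python
import csv
import io
import json
import sqlite3

# Hypothetical sources: the same kind of customer record arrives as CSV from
# one system and as JSON from another, with different field names and casing.
CSV_SOURCE = """customer_id,full_name,country
1,Ada Lovelace,uk
2,Alan Turing,UK
"""
JSON_SOURCE = '[{"id": 3, "name": " Grace Hopper ", "country_code": "us"}]'


def extract() -> list[tuple[str, dict]]:
    """Extract: read raw records from each source, tagged with their origin."""
    csv_rows = [("csv", row) for row in csv.DictReader(io.StringIO(CSV_SOURCE))]
    json_rows = [("json", row) for row in json.loads(JSON_SOURCE)]
    return csv_rows + json_rows


def transform(raw: list[tuple[str, dict]]) -> list[tuple[int, str, str]]:
    """Transform: map every source onto one consistent (id, name, country) schema."""
    unified = []
    for origin, row in raw:
        if origin == "csv":
            cid, name, country = row["customer_id"], row["full_name"], row["country"]
        else:
            cid, name, country = row["id"], row["name"], row["country_code"]
        # Normalize types, whitespace and casing so both sources agree.
        unified.append((int(cid), name.strip(), country.strip().upper()))
    return unified


def load(rows: list[tuple[int, str, str]], conn: sqlite3.Connection) -> None:
    """Load: write the unified rows into the target store (SQLite here)."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS customers ("
        "id INTEGER PRIMARY KEY, name TEXT NOT NULL, country TEXT NOT NULL)"
    )
    conn.executemany("INSERT INTO customers VALUES (?, ?, ?)", rows)
    conn.commit()


def run_pipeline(conn: sqlite3.Connection) -> None:
    """Chain the three stages: extract -> transform -> load."""
    load(transform(extract()), conn)


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    run_pipeline(conn)
    for row in conn.execute("SELECT id, name, country FROM customers ORDER BY id"):
        print(row)
```

Keeping each stage in its own function means that pointing the pipeline at a real warehouse would, in this sketch, only require changing `load`.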

An ETL pipeline is the set of processes used to move data from one or more sources into a target database, such as a data warehouse.

SQL Server Integration Services (SSIS) provides a convenient and unified way to read data from different sources (extract), perform aggregations and transformations (transform), and then integrate the data (load) for data warehousing and analytics purposes. When you need to process large amounts of data (GBs or TBs), SSIS is well suited to such workloads.

Web scraping, web harvesting, or web data extraction is data scraping used for extracting data from websites. The web scraping software may directly access the World Wide Web using the Hypertext Transfer Protocol or a web browser. While web scraping can be done manually by a software user, the term typically refers to automated processes implemented using a bot or web crawler. It is a form of copying in which specific data is gathered and copied from the web, typically into a central local database or spreadsheet, for later retrieval or analysis.
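The automated fetch, parse, and store cycle described above can be sketched with only Python's standard library: `urllib.request` fetches a page over HTTP, `html.parser` extracts specific data, and `csv` produces spreadsheet-ready output. This is a minimal illustration under assumptions: extracting links is an arbitrary choice of target data, the `User-Agent` string is a placeholder, and the helper names `fetch_html`, `LinkExtractor`, `extract_links`, and `to_csv` are hypothetical.

```python
import csv
import io
from html.parser import HTMLParser
from urllib.request import Request, urlopen


def fetch_html(url: str, timeout: float = 10.0) -> str:
    """Download a page over HTTP(S) and decode it as text."""
    # Placeholder User-Agent; some sites reject requests without one.
    req = Request(url, headers={"User-Agent": "example-scraper/0.1"})
    with urlopen(req, timeout=timeout) as resp:
        charset = resp.headers.get_content_charset() or "utf-8"
        return resp.read().decode(charset, errors="replace")


class LinkExtractor(HTMLParser):
    """Collect (link text, href) pairs from every <a href="..."> element."""

    def __init__(self) -> None:
        super().__init__()
        self.links: list[tuple[str, str]] = []
        self._href: str | None = None  # href of the <a> we are inside, if any
        self._text: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self._href = href
                self._text = []

    def handle_data(self, data):
        # Only keep text that appears inside a link.
        if self._href is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            # Collapse runs of whitespace in the link text.
            text = " ".join("".join(self._text).split())
            self.links.append((text, self._href))
            self._href = None


def extract_links(html: str) -> list[tuple[str, str]]:
    """Parse an HTML string and return its links."""
    parser = LinkExtractor()
    parser.feed(html)
    parser.close()
    return parser.links


def to_csv(rows: list[tuple[str, str]]) -> str:
    """Serialize the scraped rows as CSV text for later analysis."""
    buf = io.StringIO()
    writer = csv.writer(buf)
    writer.writerow(["text", "href"])
    writer.writerows(rows)
    return buf.getvalue()
```

A typical call would be `print(to_csv(extract_links(fetch_html("https://example.com"))))`. Keeping fetching separate from parsing lets the parser be tested on saved HTML without network access.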

Who this course is for

Beginners to Data Engineering

Course: Data Engineering – SSIS/ETL/Pipelines/Python/Web Scraping
