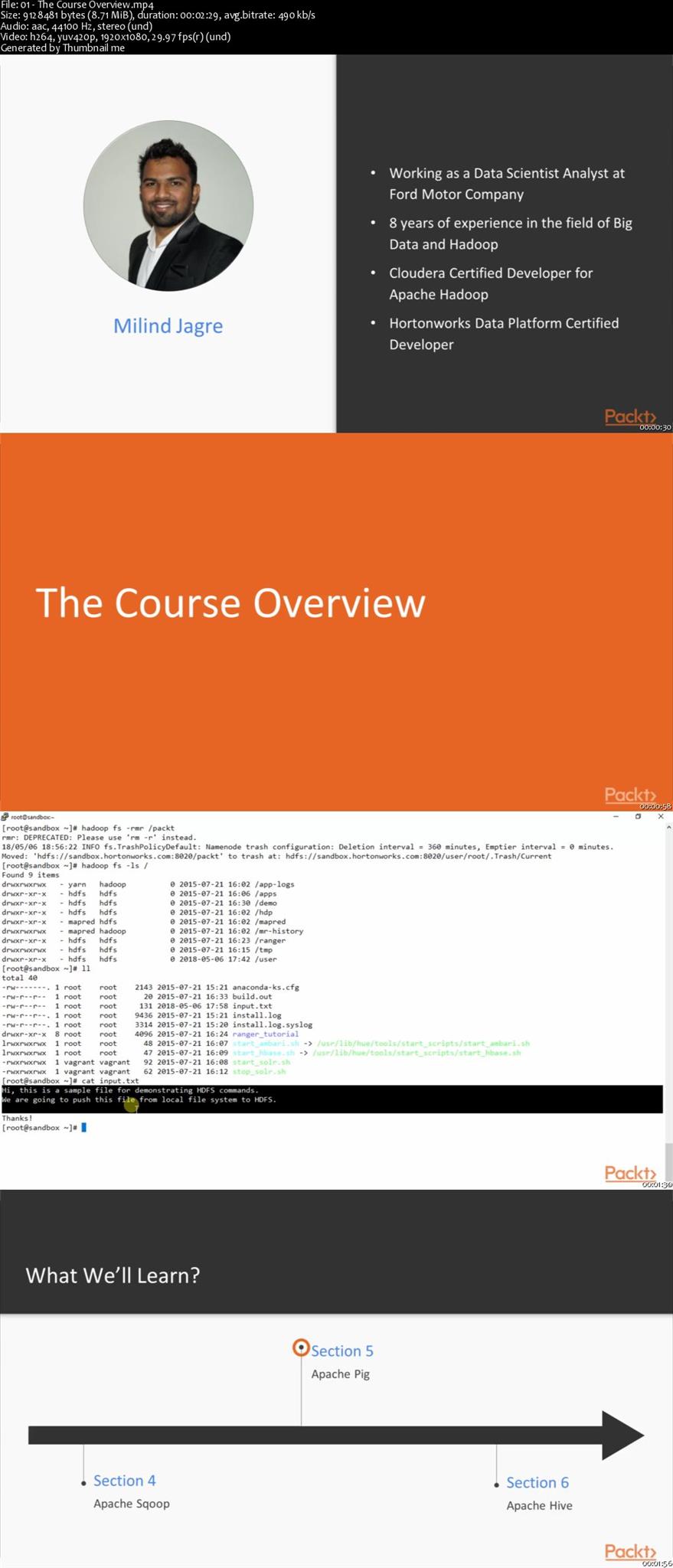

Do you struggle to store and handle big data sets? This course will teach you to handle big data sets smoothly using Hadoop 3.

The course starts by covering the basic commands that big data developers use on a daily basis. Then, you’ll focus on HDFS architecture and the command lines that a developer uses frequently. Next, you’ll use Flume to import data from other systems into the Hadoop ecosystem, making it available for storage and for analysis with MapReduce. You’ll also learn to import and export data between an RDBMS and HDFS using Sqoop. Then, you’ll learn about Apache Pig, which is used to process the data brought in with Flume and Sqoop. Here you’ll also learn to load, transform, and store data in Pig relations. Finally, you’ll dive into Hive functionality and learn to load, update, and delete content in Hive.

By the end of the course, you’ll have gained enough knowledge to work with big data using Hadoop. So, grab the course and handle big data sets with ease.

The code bundle for this course is available at https://github.com/PacktPublishing/Hands-On-Beginner-s-Guide-on-Big-Data-and-Hadoop-3-.

Password (extraction password): 0daydown

Download rapidgator

https://rg.to/file/1d39e933d5f990a250127e19a31edf84/Hands-On_Beginner’s_Guide_on_Big_Data_and_Hadoop_3.part1.rar.html

https://rg.to/file/70cc0d150350ffba2f6cade1dd0a6dad/Hands-On_Beginner’s_Guide_on_Big_Data_and_Hadoop_3.part2.rar.html


When reposting, please credit: 0daytown » Hands-On Beginner’s Guide on Big Data and Hadoop 3